Role

Spatial Computing Researcher

Tools

Unity3D, OpenCV, Azure Kinect

Focus

VR/AR, Human-Computer Interaction

Duration

May - Aug 2023

Results

<100 ms Latency

CyLab

@ Carnegie Mellon University

Probably my favorite, and also the most complex, project I've worked on.

Depth cameras, point clouds, human pose detection, 3D modeling, and virtual reality interface design, all in one project.

I regard this as "the complete package" of project experiences, mainly because it struck such a good balance between development and design work.

Long story short

If I had to sum up my journey and the work I did on this project in a nutshell, this would be it.

Understanding the workspace

The entire environment was alien to me, so the first step was getting comfortable working among the cameras and sensors.

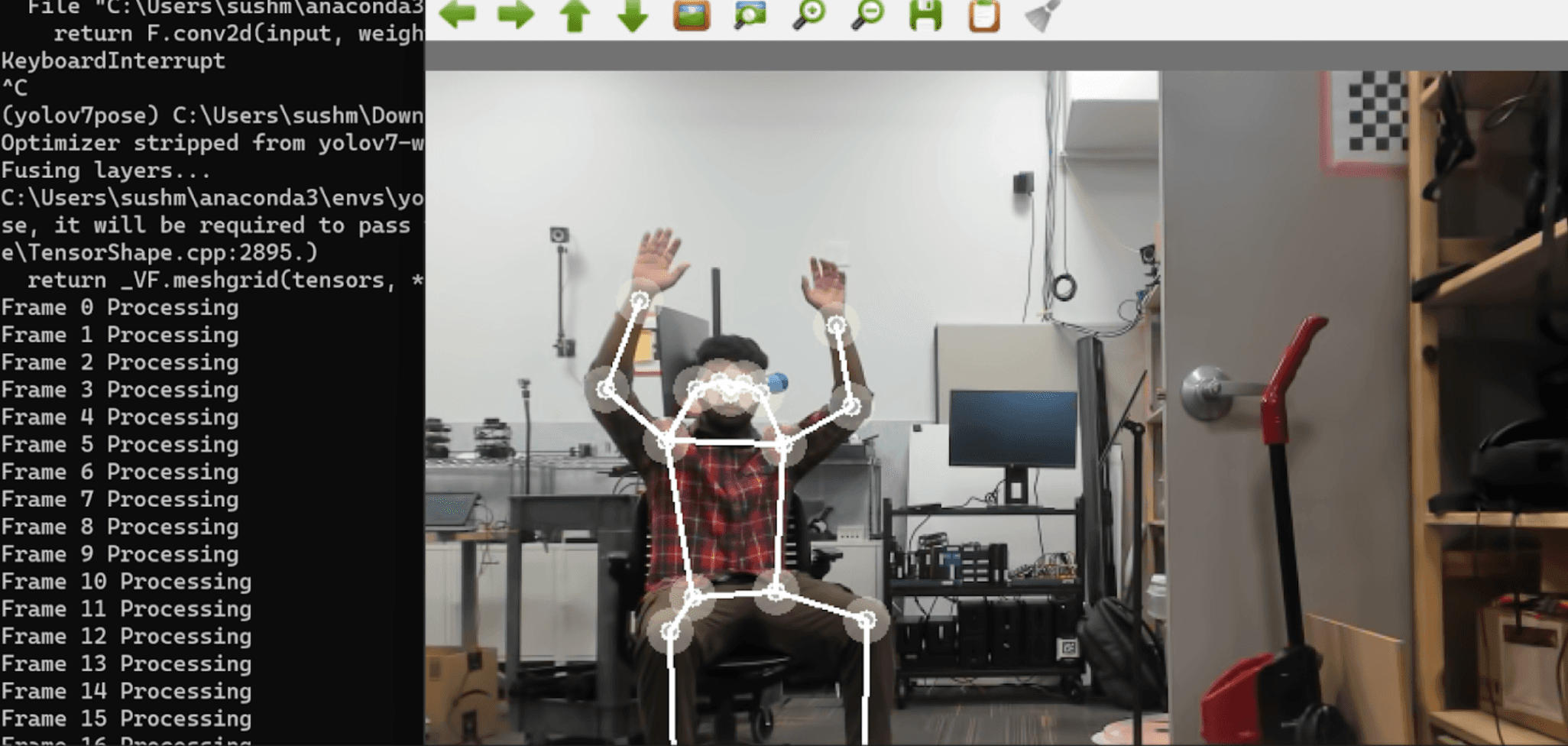

Human Pose Estimation

The next block in the pipeline was developing and testing several pose detection and tracking models for real-time human pose estimation.

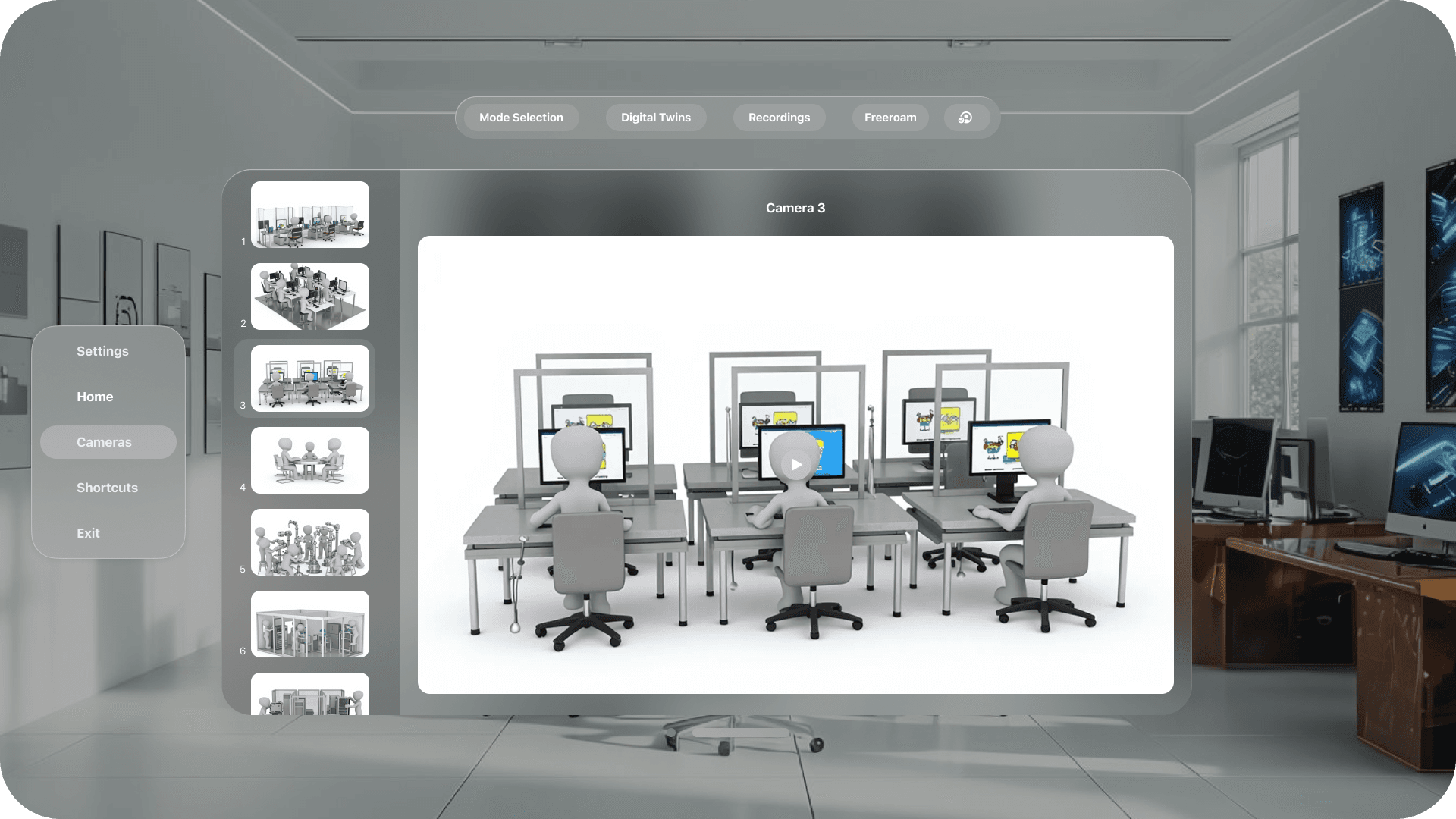

VR/AR Interface Design

I was glad to reach this point in the project roadmap. The final step was designing an interface that was as user-friendly as possible.

Prototyping and Validation

Nothing is complete until it has been thoroughly tested and used. The design went through numerous iterations before the final version made the cut.